More DirectX 10.1 Improvements:

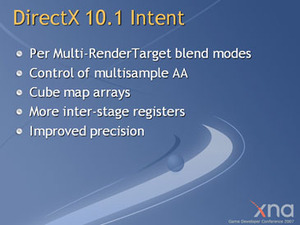

It's fair to say that DirectX 10 was a big step forwards, as it defined a much tighter set of boundaries within which modern GPUs could operate and greatly reduced the number of optional features. DirectX 10.1 takes the API further down this path, making GPU behaviour more predictable for developers trying to implement new effects and features. The new features include new texture formats, higher precision requirements and more stringent MSAA requirements, including giving developers the ability to create their own custom anti-aliasing filters. We'll come to each of those in due course; first, let's look at what has changed, and we'll cover the more stringent requirements along the way.

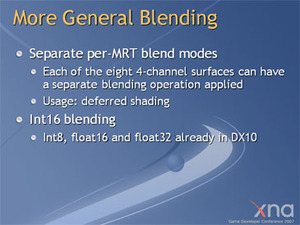

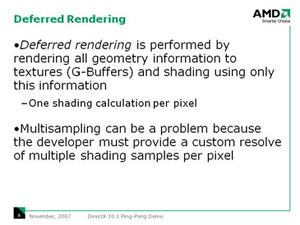

Deferred rendering has been around for ages and it’s used in games like Ghost Recon: Advanced Warfighter, Rainbow Six: Vegas, Gears of War, BioShock and Unreal Tournament III. Rather than lighting each object as it is drawn, the game first renders the scene’s geometry information (such as surface colour, normals and depth) into multiple render targets (MRTs). Lighting is then calculated in one or more later passes, which shade each pixel using the information stored in those textures.
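To make the two-pass structure concrete, here is a minimal CPU-side sketch in Python. It is a simplified model, not how any of the games above implement it: render targets are plain dictionaries keyed by pixel, the `fragments` input stands in for the rasteriser's output, and lighting is simple Lambert diffuse with no attenuation. The function names are hypothetical.

```python
import math

def normalize(v):
    # Scale a 3-vector to unit length (zero vectors are returned unchanged).
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0 else v

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def geometry_pass(fragments):
    """Pass 1: write surface attributes into three separate render targets.
    `fragments` maps (x, y) -> (albedo, normal, position) and stands in for
    the rasteriser's output; no lighting is computed here."""
    albedo_rt, normal_rt, position_rt = {}, {}, {}
    for pixel, (albedo, normal, position) in fragments.items():
        albedo_rt[pixel] = albedo
        normal_rt[pixel] = normalize(normal)
        position_rt[pixel] = position
    return albedo_rt, normal_rt, position_rt

def lighting_pass(albedo_rt, normal_rt, position_rt, lights):
    """Pass 2: shade every pixel by reading only the render targets.
    Each light is (position, rgb colour); Lambert diffuse, no attenuation."""
    image = {}
    for pixel, albedo in albedo_rt.items():
        rgb = [0.0, 0.0, 0.0]
        for light_pos, light_rgb in lights:
            to_light = normalize(tuple(l - p for l, p in zip(light_pos, position_rt[pixel])))
            diffuse = max(0.0, dot(normal_rt[pixel], to_light))
            for i in range(3):
                rgb[i] += albedo[i] * light_rgb[i] * diffuse
        image[pixel] = tuple(rgb)
    return image
```

The lighting pass never touches the scene's geometry again, so its cost scales with the number of pixels times the number of lights rather than with scene complexity, which is the main attraction of the technique.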

With DirectX 10.1, Microsoft has improved the way pixel shaders write to multiple render targets: each buffer (or MRT) that the pixel shader writes to can now apply its own blending operation, independent of the other buffers. Additionally, Microsoft has introduced INT16 blending, adding to the currently supported blending formats.

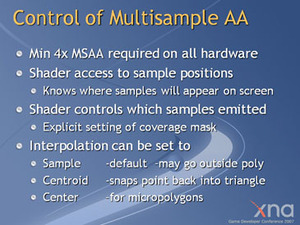

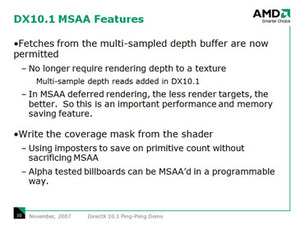

That’s not the only improvement to deferred rendering though, because DirectX 10.1 makes two further features compulsory: multi-sample buffer reads and writes, and pixel coverage masking.

These let developers access the individual depth and colour samples in a multi-sample buffer from the pixel shader, which allows them to create custom anti-aliasing filters like the ones that AMD introduced with the Radeon HD 2900 XT. On top of that, these features improve compatibility and performance when anti-aliasing is used in conjunction with deferred rendering and HDR rendering techniques.
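Once the shader can read individual samples, the "resolve" step that turns samples into a final pixel colour no longer has to be a plain average. The following sketch contrasts a standard box resolve with a hypothetical wider filter that also pulls in samples from neighbouring pixels. It assumes single-channel samples stored as `grid[y][x]`, and the weights are purely illustrative; this is not AMD's actual filter.

```python
def box_resolve(samples):
    """Conventional resolve: the plain average of one pixel's own samples."""
    return sum(samples) / len(samples)

def weighted_resolve(grid, x, y, neighbour_weight=0.5):
    """Hypothetical custom filter. grid[y][x] is the list of (single-channel)
    samples for pixel (x, y). The pixel's own samples get weight 1.0; samples
    of the four edge-adjacent pixels get `neighbour_weight`."""
    height, width = len(grid), len(grid[0])
    taps = [(0, 0, 1.0), (1, 0, neighbour_weight), (-1, 0, neighbour_weight),
            (0, 1, neighbour_weight), (0, -1, neighbour_weight)]
    total = weight_sum = 0.0
    for dx, dy, weight in taps:
        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:  # skip taps outside the image
            for sample in grid[ny][nx]:
                total += sample * weight
                weight_sum += weight
    return total / weight_sum
```

With a white pixel next to a black one, the box resolve keeps the white pixel at 1.0, while the wider filter softens it to 2/3. This trades some sharpness for smoother edges, which is the kind of choice custom filters hand back to the developer.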

DirectX 10.1 introduces a minimum requirement for anti-aliasing support too. In order to be DirectX 10.1 compliant, the GPU must support at least four samples per pixel (4xMSAA) on both 32-bit and 64-bit pixel formats. Additionally, Microsoft has standardised a set of anti-aliasing sample patterns. These used to be determined by each hardware vendor, which often led to poor anti-aliasing support in games: to support the major hardware vendors, developers had to implement multiple sample patterns in the game.
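A fixed sample pattern means the same edge produces the same coverage mask on every compliant GPU. The sketch below computes a 4-bit coverage mask for one pixel. It assumes the 4-sample standard pattern documented for later Direct3D versions, offsets of (-2,-6), (6,-2), (-6,2) and (2,6) in 1/16-pixel units from the pixel centre, and that DirectX 10.1 uses the same positions. The `inside` callback is a hypothetical stand-in for the rasteriser's edge tests.

```python
# Assumed standard 4x pattern, in 1/16-pixel units relative to the pixel centre.
STANDARD_4X = [(-2, -6), (6, -2), (-6, 2), (2, 6)]

def sample_positions(px, py, pattern=STANDARD_4X):
    # Pixel (px, py) has its centre at (px + 0.5, py + 0.5).
    return [(px + 0.5 + dx / 16.0, py + 0.5 + dy / 16.0) for dx, dy in pattern]

def coverage_mask(px, py, inside, pattern=STANDARD_4X):
    """Bit i is set if sample i of pixel (px, py) lies inside the primitive.
    `inside` is a function (x, y) -> bool standing in for the edge tests."""
    mask = 0
    for i, (sx, sy) in enumerate(sample_positions(px, py, pattern)):
        if inside(sx, sy):
            mask |= 1 << i
    return mask

def coverage_fraction(mask, sample_count=4):
    # Share of samples covered; this drives how much of the colour lands in the pixel.
    return bin(mask).count("1") / sample_count
```

For a vertical edge at x = 0.5 running through the middle of pixel (0, 0), samples 0 and 2 fall inside, giving the mask `0b0101` and 50 per cent coverage.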

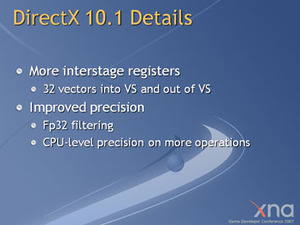

DX10.1 also tightens the rules for FP32 (32-bit floating-point) textures: the specification requires GPUs to be able to filter textures that use these formats. Texturing performance has also been improved with two new instructions, Gather4 and LOD. Gather4 allows the developer to fetch a 2x2 block of unfiltered texels instead of doing a single bilinear filtered texture lookup. LOD is a new shader instruction that returns the level of detail (mipmap level) required for a filtered texture lookup. Together, they allow developers to create custom texture filtering algorithms to improve both performance and image quality.
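Here is a rough model of how the two instructions enable custom filtering. It assumes a single-channel texture stored as a list of rows, clamp addressing, and texel centres at half-integer coordinates. `gather4` returns the same 2x2 footprint a bilinear lookup would use, in our own ordering rather than the hardware's. From that, a shader can rebuild bilinear filtering or do something else entirely, such as percentage-closer filtering on a shadow map. `lod` uses the common "log2 of the larger texel footprint" approximation; real hardware may compute it differently. All names are hypothetical.

```python
import math

def footprint(u, v, width, height):
    # Top-left texel of the 2x2 bilinear footprint and the fractional offsets.
    x, y = u * width - 0.5, v * height - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    return x0, y0, x - x0, y - y0

def gather4(texture, u, v):
    """Return the four unfiltered texels (top-left, top-right, bottom-left,
    bottom-right) that a bilinear lookup at (u, v) would blend."""
    height, width = len(texture), len(texture[0])
    x0, y0, _, _ = footprint(u, v, width, height)
    def texel(ix, iy):  # clamp addressing
        return texture[min(max(iy, 0), height - 1)][min(max(ix, 0), width - 1)]
    return texel(x0, y0), texel(x0 + 1, y0), texel(x0, y0 + 1), texel(x0 + 1, y0 + 1)

def bilinear_from_gather(texture, u, v):
    # Rebuild ordinary bilinear filtering from the gathered texels.
    t00, t10, t01, t11 = gather4(texture, u, v)
    _, _, fx, fy = footprint(u, v, len(texture[0]), len(texture))
    top = t00 * (1 - fx) + t10 * fx
    bottom = t01 * (1 - fx) + t11 * fx
    return top * (1 - fy) + bottom * fy

def pcf_from_gather(shadow_map, u, v, depth):
    # Custom filter: fraction of the four texels that do not occlude `depth`.
    return sum(1 for d in gather4(shadow_map, u, v) if depth <= d) / 4.0

def lod(dudx, dvdx, dudy, dvdy, width, height):
    """Approximate mip level from screen-space UV derivatives."""
    len_x = math.hypot(dudx * width, dvdx * height)
    len_y = math.hypot(dudy * width, dvdy * height)
    size = max(len_x, len_y)
    return math.log2(size) if size > 0 else float("-inf")
```

One pixel stepping one texel gives level 0; stepping four texels gives level 2, so the shader knows which mip level to sample before it fetches anything.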

Finally, Microsoft has also increased the number of inter-stage registers for the vertex shader. In DirectX 10.0, there were sixteen 128-bit vector inputs and sixteen 128-bit vector outputs per shader; DirectX 10.1 doubles this to 32 both into and out of the vertex shader. This gives developers more room to pass data between shader stages, which should help with some of the more complex shaders that are likely to appear in future games.
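As a quick piece of arithmetic, assuming each register holds four 32-bit components (128 bits in total), here is how much data can cross the vertex shader boundary per vertex in each version:

```python
REGISTER_BITS = 128      # one four-component vector register
COMPONENT_BITS = 32      # assumed 32-bit components, e.g. FP32

def interface_bytes(register_count):
    # Total bytes that fit in the given number of 128-bit registers.
    return register_count * REGISTER_BITS // 8

def interface_scalars(register_count):
    # Number of 32-bit values that fit in the given number of registers.
    return register_count * REGISTER_BITS // COMPONENT_BITS

dx10_0 = (interface_bytes(16), interface_scalars(16))
dx10_1 = (interface_bytes(32), interface_scalars(32))
```

That works out to 256 bytes (64 scalar values) per direction in DirectX 10.0 versus 512 bytes (128 scalar values) in DirectX 10.1.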
